Key Points

- Artificial intelligence (AI) is far from new, but the launch of ChatGPT in November 2022 created widespread awareness of AI’s potential for the K–12 education sector.

- The Association of Christian Schools International (ACSI) conducted a survey of its members in the fall of 2023 to gain insight into ACSI school policies and instruction about AI, how educators are using AI in teaching and learning, and educators’ perceptions about the benefits, drawbacks, and future of AI in education.

- The survey found that about one third of Christian school educators—that is, leaders and teachers—reported that their school used AI in teaching and learning (predominantly in high school grades), and that most educators have low levels of familiarity, usage, and confidence when it comes to AI technology.

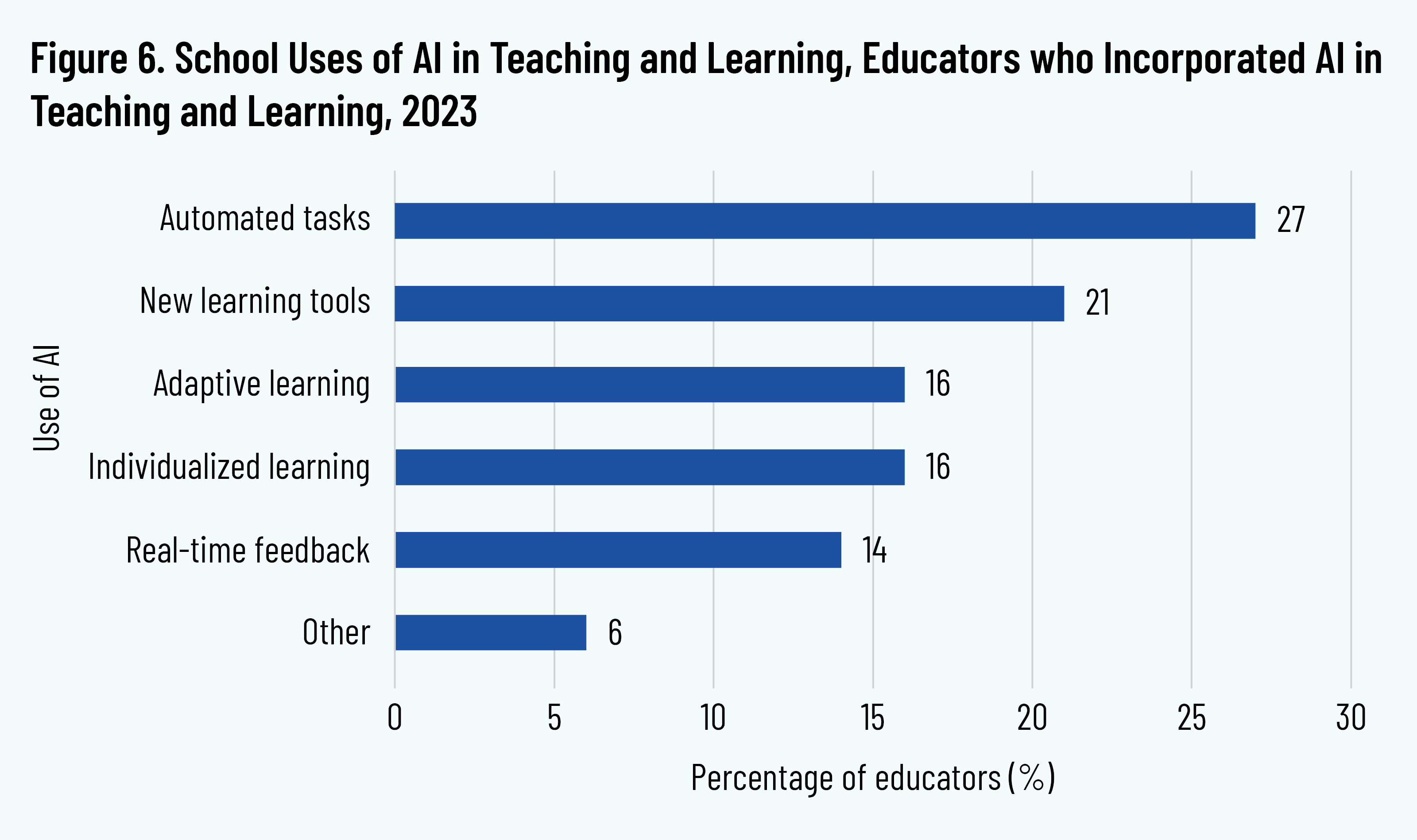

- At Christian schools that incorporated AI in instruction, the top four uses were 1) automated tasks, such as generating essay prompts or lesson plan ideas, 2) new learning tools, such as AI powered games, 3) adaptive learning, providing students with more or less challenging materials depending on their performance, and 4) individualized learning, in which AI-powered tutoring programs tailor instruction to students’ individual needs.

- Overall, a large percentage of surveyed Christian school educators believed that AI could help them save time and effort as well as develop more effective curriculum and lesson plans. However, they are concerned that AI will have a negative impact on students’ integrity (cheating), learning (hindering the development of creative and critical thinking skills), safety (potential hacking or monitoring), and faith development.

- Early adopters of AI in Christian schools tended to be located in urban settings and at schools with missional versus covenantal admissions policies (missional schools do not require parents to attend church or sign a statement of faith). School leaders also tended to be more favorable about AI than teachers.

- Christian schools’ unique missions—including their theological views and educational philosophies—should inform the question of whether or how to adopt new AI technologies. Collaborative and schoolwide dialogue can be used to explore the opportunities and challenges that AI poses. To this end, this report concludes with some suggested discussion prompts.

Introduction

Artificial intelligence (AI) is far from new, with AI already embedded in technologies such as smartphone speech-to-text, digital assistants (such as Siri or Alexa), and GPS-enabled maps or routing apps. It was the launch of ChatGPT in November 2022, however, that created widespread awareness of AI’s potential for the K–12 education sector. ChatGPT gained attention because it was the first AI tool that successfully “positioned itself as a disruptive technology that is revolutionising the way students are taught, promoted, and supported in academic environments.” [1]

[1] M. Montenegro-Rueda et al., “Impact of the Implementation of ChatGPT in Education: A Systematic Review,” Computers 12, no. 8 (2023): 2, https://doi.org/10.3390/computers12080153.

ChatGPT and similar tools are unlike previous AI applications. First, they are generative, using large language models to create new content (such as text or images) based on patterns present in the data they draw upon. Further, chatbot tools are designed to simulate conversation with human users, typically through text-based interfaces. These tools use various techniques, including rule-based systems, machine learning algorithms, and natural language processing, to understand user input and generate appropriate responses. Put simply, these tools are conversational, draw upon nearly limitless data, and can easily (if not always accurately) perform tasks such as writing essays or providing answers to homework questions, thereby imbuing them with the potential to affect education in significant if not radical ways. Educators around the world reacted to ChatGPT’s launch with a range of responses, from implementing bans and installing detection software to embracing ChatGPT and similar tools in day-to-day teaching and learning. [2]

[2] C. Gordon, “How Are Educators Reacting to ChatGPT?,” Forbes, April 30, 2023, https://www.forbes.com/sites/cindygordon/2023/04/30/how-are-educators-reacting-to-chat-gpt/.

Against this backdrop, and to understand its members’ responses to AI, the Association of Christian Schools International (ACSI) conducted a member survey in late 2023 regarding AI usage and perceptions. [3] ACSI is the largest Protestant Christian school association, with close to 5,500 member schools around the world, 2,300 of which are in the United States. ACSI membership is diverse in terms of school size (by enrollment), structure (independent or church-sponsored), admissions practices, and urbanicity.

[3] Throughout this report, “AI,” “ChatGPT,” and “chatbots” are used interchangeably to refer to this new class of AI tools that are generative, work from large language models, and are conversational (use natural language processing).

The results of the survey provide insight into ACSI schools’ policies and instruction about AI, how educators are using AI in teaching and learning, and educators’ perceptions about the benefits, drawbacks, and future of AI in education. This report unpacks the survey findings to provide a descriptive look at the state of AI adoption in these schools and to create a profile of early adopters of AI in these schools. The report concludes by providing a framework with reflection questions for Christian school educators as they consider whether or how to adopt AI in their settings.

Survey Methodology

ACSI fielded an electronic survey on AI in early November 2023 for two weeks. The target participants included school leaders (heads of school, principals, vice principals, deans, etc.), teachers, and staff. The invitation to take the survey was sent by email to all individuals on two email lists that ACSI maintains (one for teachers and one for leaders). The survey was sent to a total of 16,797 individuals, and 705 responded, with a response rate of 4.2 percent. The completion rate for those who responded was 70 percent. Unless otherwise noted, all reported results in figures are from these survey respondents.
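The response rate quoted above follows directly from the two counts given in the paragraph. As a quick check, a minimal sketch (the function name `response_rate` is illustrative, not part of the survey tooling):

```python
def response_rate(responded: int, invited: int) -> float:
    """Return the response rate as a percentage of those invited."""
    return responded / invited * 100

# Counts reported in the Survey Methodology paragraph.
rate = response_rate(responded=705, invited=16_797)
print(f"Response rate: {rate:.1f} percent")
```

At roughly 4 percent, the respondents are a small, self-selected share of those invited, which is worth keeping in mind when reading the percentages that follow.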

Respondent Demographics

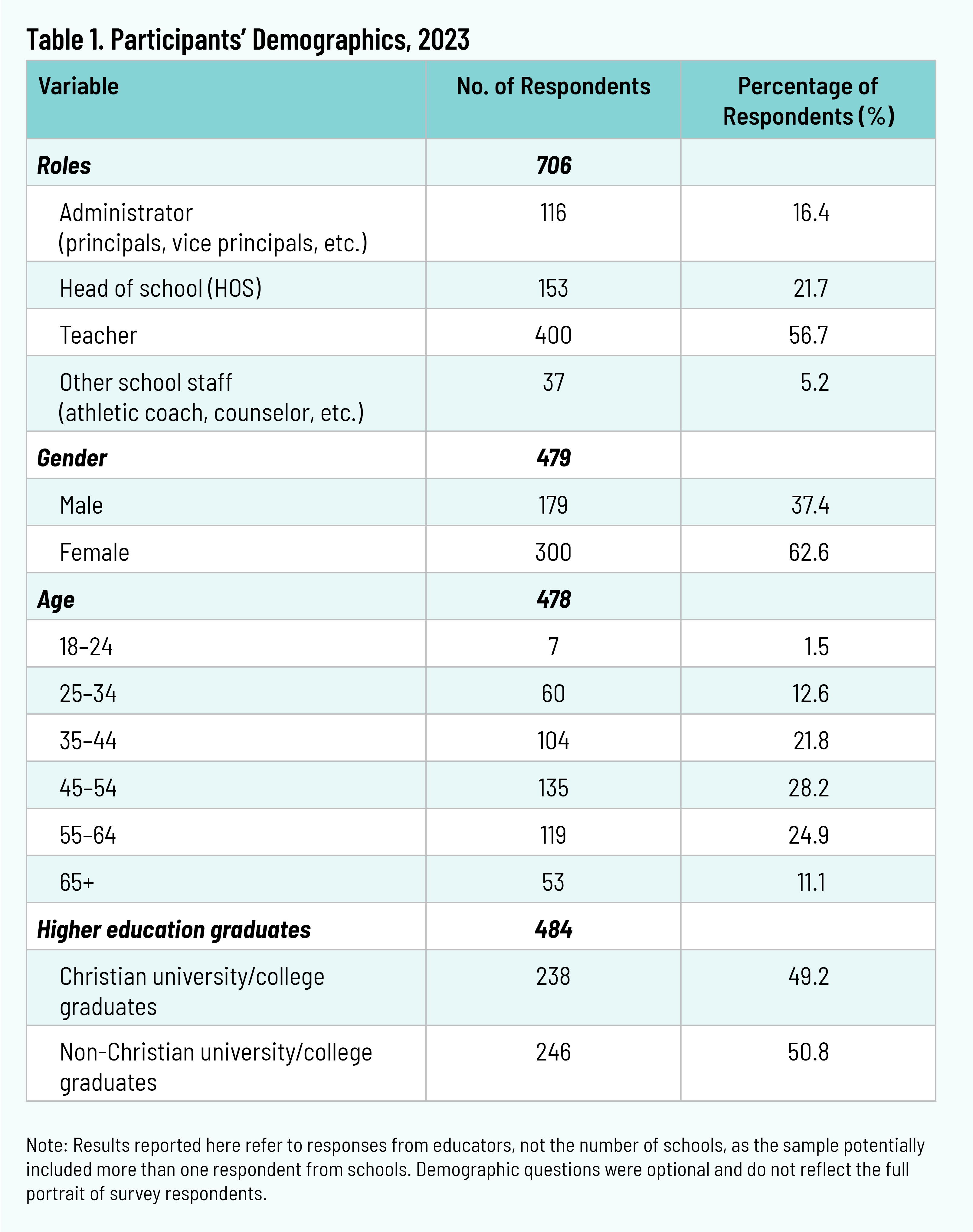

The majority of respondents were teachers (57 percent), followed by heads of school (22 percent), administrators (principals, vice principals, etc.) (16 percent), and other school staff that included counselors, coaches, and administrative staff (5 percent). Respondents were predominantly female, and the majority of respondents’ ages fell in the range of 45–54 years. Respondents were almost evenly split between those who had graduated from Christian colleges or universities (49 percent) and those who had graduated from public or private non-sectarian colleges or universities (51 percent). Eighty-seven percent of respondents were in the US, and 13 percent were in thirty-four other countries.

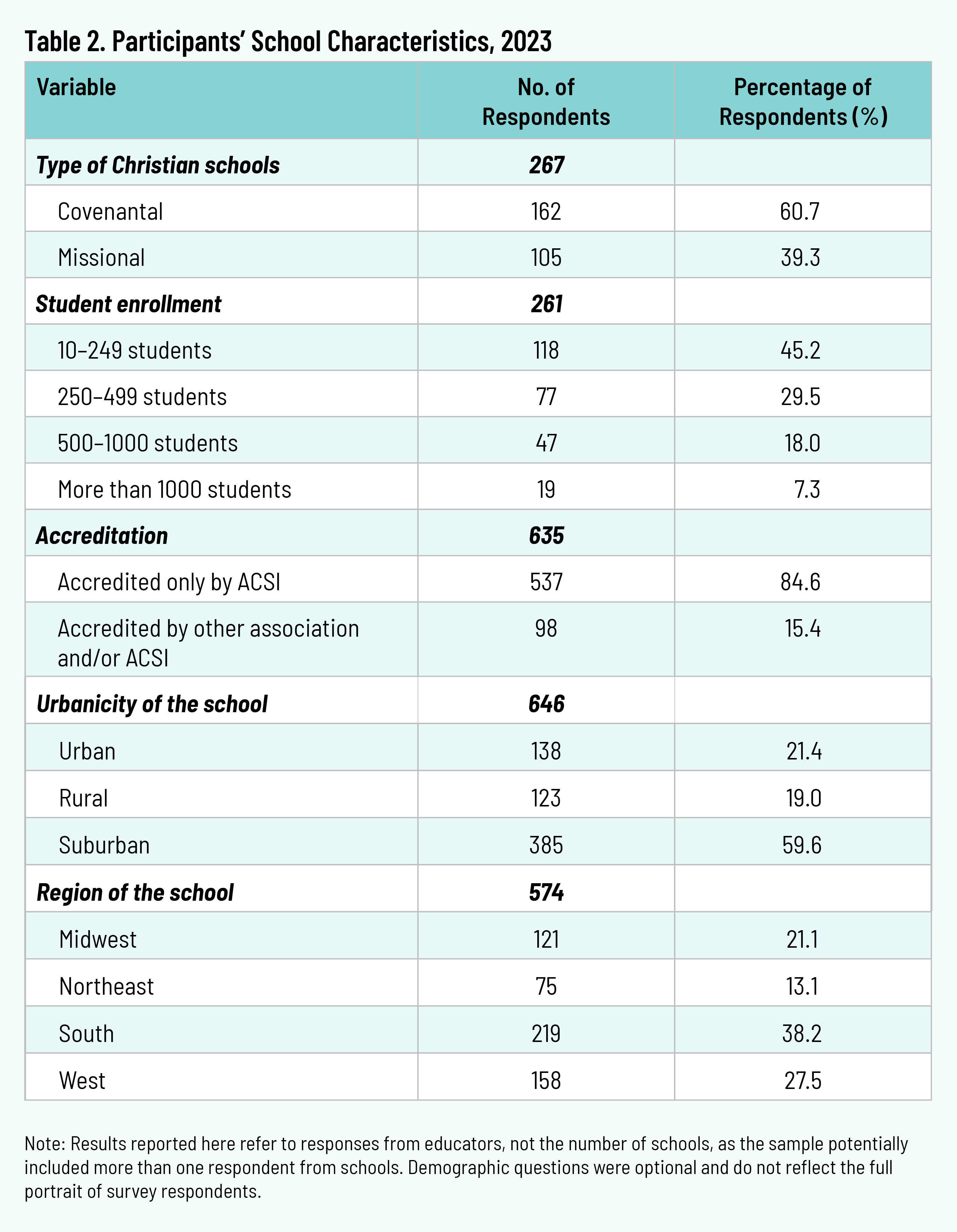

As for school characteristics, 61 percent of the respondents worked at covenantal schools (meaning that the school requires parents to be active members of a church and/or to sign a statement of faith upon the admission of their children to the school), 45 percent worked in a school with an enrollment below 250 students, 60 percent worked in a school located in a suburban area, and 38 percent worked in a school in the southern part of the US. Detailed demographics for the sample are provided in tables 1 and 2.

Data Analysis

Two analytical approaches are used for this report. First, descriptive analyses are provided for the survey questions. Following the methodology of a previous Cardus report by Cheng et al., this first analysis did not control for demographic or school characteristics. [4] This approach seeks to generate baseline descriptive data.

[4] A. Cheng, R. Djita, and D. Hunt, “Many Educational Systems, A Common Good: An International Comparison of American, Canadian, and Australian Graduates from the Cardus Education Survey,” Cardus, 2022, https://www.cardus.ca/research/education/reports/many-educational-systems-a-common-good/.

Next, simple regression was used to explore possible relationships among outcome variables, by controlling for respondents’ demographic and school characteristics. While causality cannot be identified through this analysis, it provides a picture of the correlations that exist between certain respondent characteristics and higher rates of AI adoption.
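To make the idea of "controlling for" characteristics concrete, here is a minimal sketch. The report does not specify the model or software used, so this assumes an ordinary least squares linear probability model: a yes/no outcome is regressed on indicator variables, and each coefficient reads as a percentage-point difference with the other variables held fixed. The data are synthetic, and the variable names (`leader`, `urban`, `missional`) and effect sizes are hypothetical, not survey results.

```python
import random

def ols(rows, y):
    """Least-squares coefficients from the normal equations (X'X)b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting."""
    k = len(rows[0])
    # Augmented matrix [X'X | X'y].
    a = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(rows, y))]
        for i in range(k)
    ]
    for col in range(k):
        # Swap in the row with the largest entry in this column.
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        # Clear this column from every other row.
        for r in range(k):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [x - factor * p for x, p in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]

# Synthetic respondents: all probabilities below are invented for illustration.
random.seed(0)
rows, outcome = [], []
for _ in range(2000):
    leader = float(random.random() < 0.4)
    urban = float(random.random() < 0.3)
    missional = float(random.random() < 0.4)
    p_agree = 0.30 + 0.10 * leader + 0.15 * urban + 0.05 * missional
    rows.append([1.0, leader, urban, missional])  # leading 1.0 is the intercept
    outcome.append(float(random.random() < p_agree))

names = ["intercept", "leader", "urban", "missional"]
for name, b in zip(names, ols(rows, outcome)):
    print(f"{name:>10}: {b * 100:+.1f} percentage points")
```

A logistic model is a common alternative for binary outcomes; the linear form is used here only because its coefficients map directly onto the percentage-point language used in the findings.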

Key Findings

This section first provides descriptive results for Christian school educators (leaders and teachers), [5] organized by three themes: educator use of AI, school responses to AI, and educator perceptions relating to AI. Next, using findings from regression analysis, correlational data is used to paint a picture of early adopters of AI in these schools.

[5] The term “educators” refers throughout the report to both leaders and teachers. Results for each group are reported separately only when the difference is greater than ten percentage points.

Educator Use of AI

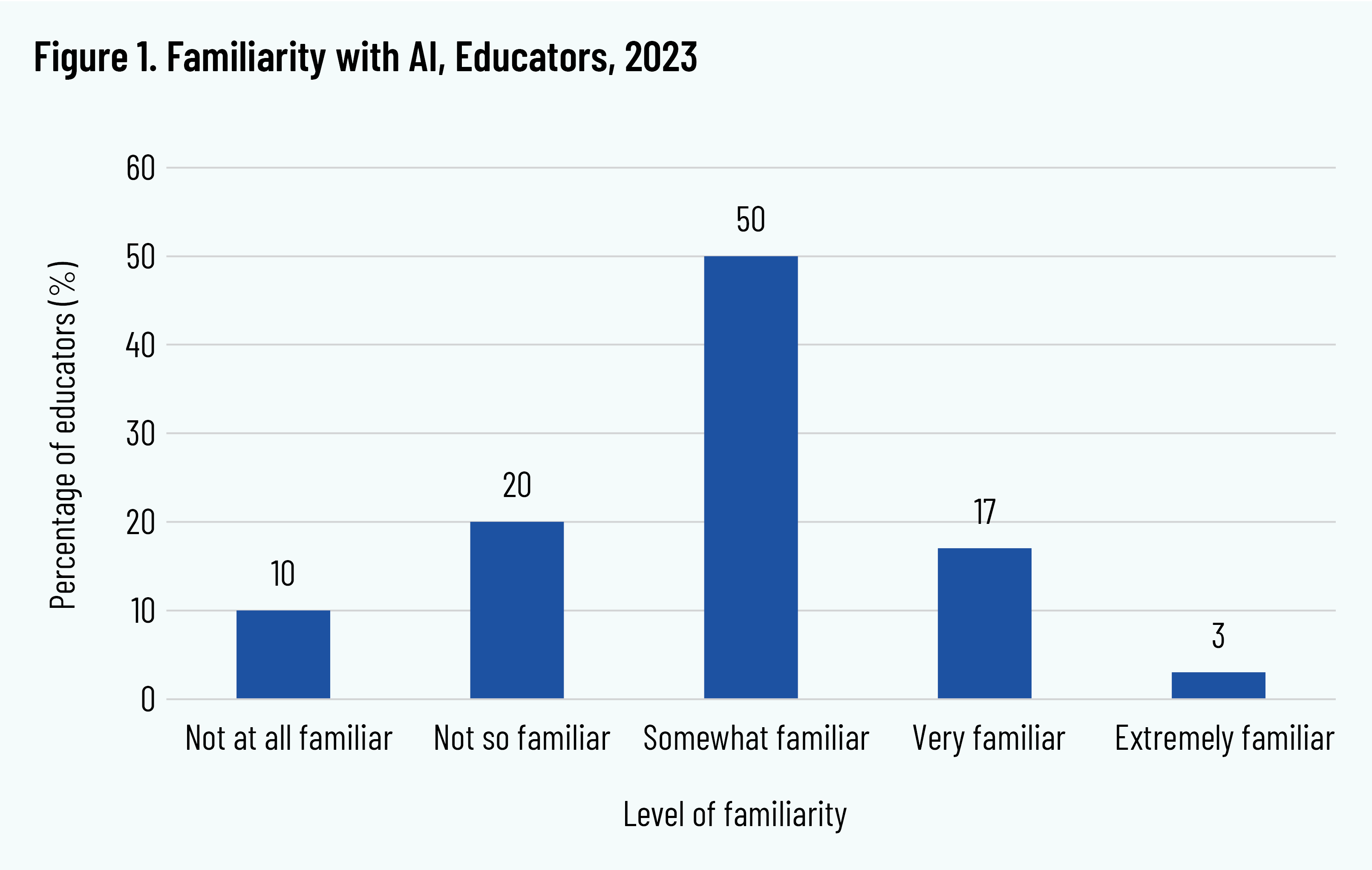

Overall, the survey data regarding educators’ use of AI suggest that the respondents are in the beginning stages of engaging with AI technology. First, respondents were asked about their level of familiarity with AI chatbots and related tools. While half of educators (50 percent) indicated they were somewhat familiar, close to a third (30 percent) were not familiar, and 20 percent were very or extremely familiar (figure 1).

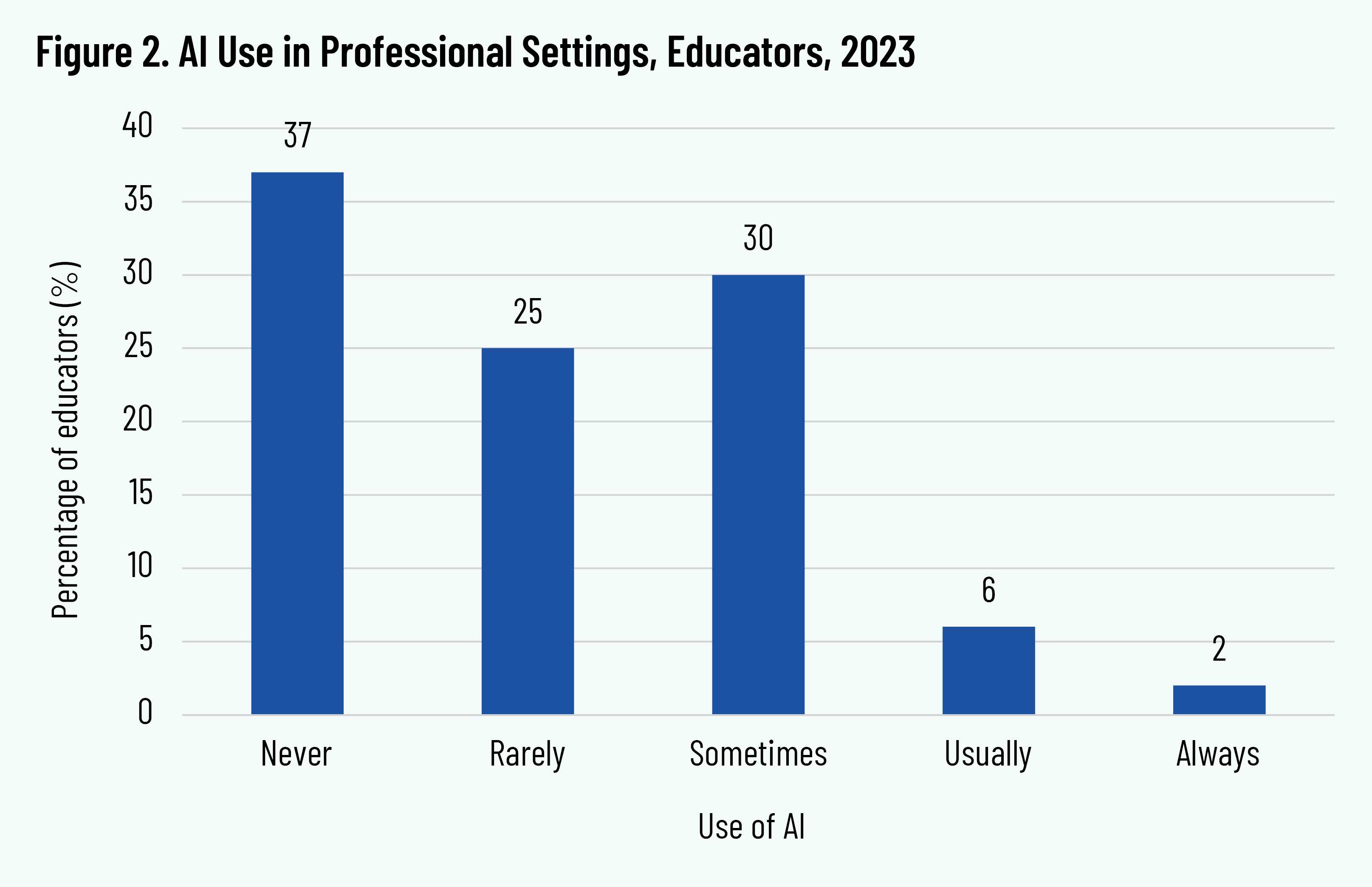

The survey then asked respondents how frequently they use AI in their work. Over a third of educators (37 percent) reported that they had never used AI in their work, and a quarter (25 percent) reported using the technology rarely. Less than a third (30 percent) reported using AI sometimes, and 8 percent reported using it usually or always. Compared with their familiarity with AI, the current state of usage among these educators skews heavily toward non- or infrequent use (figure 2).

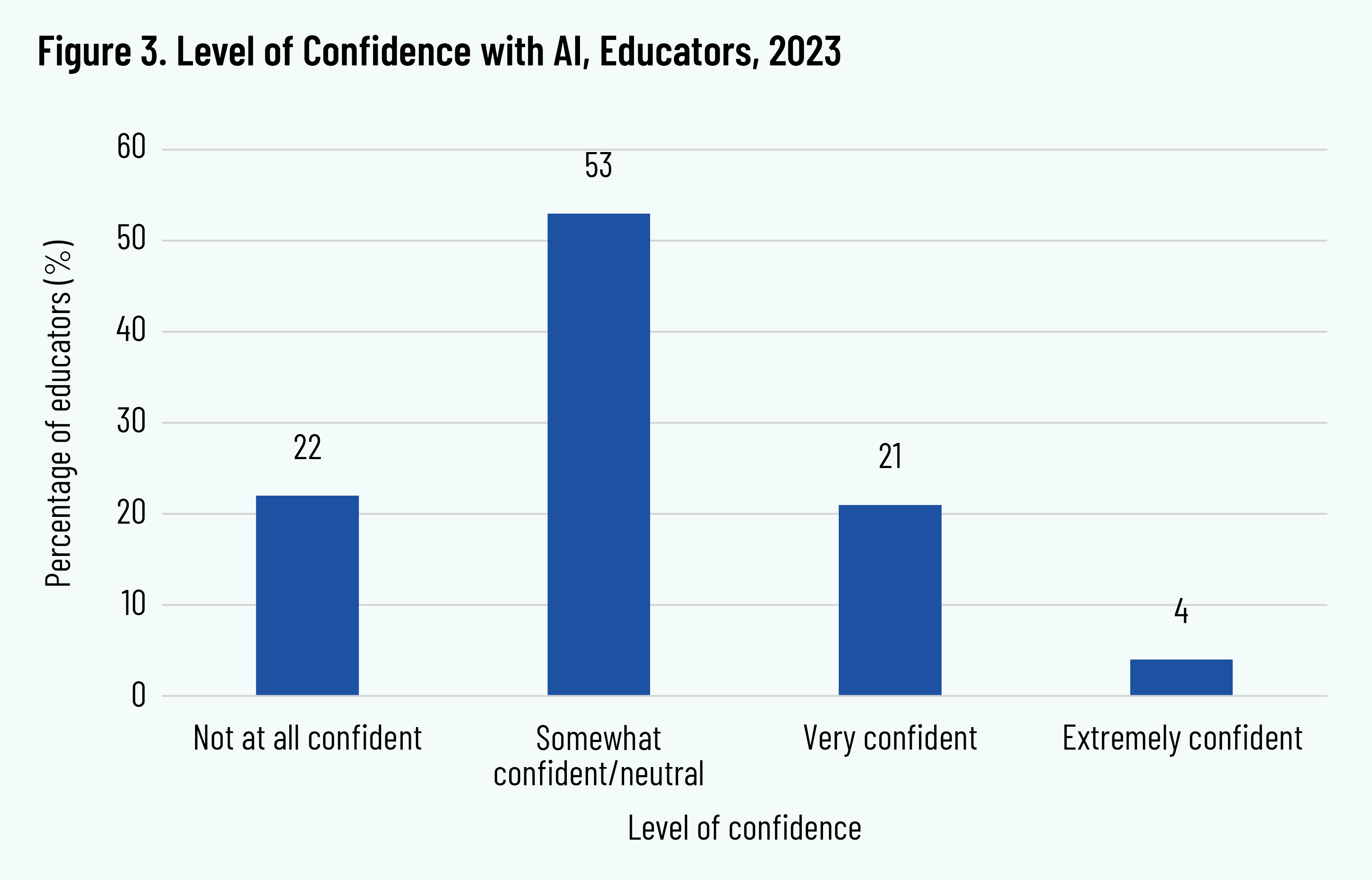

Like educators’ level of use, their level of confidence with AI also skews low, with only 25 percent of educators reporting being very or extremely confident in using AI chatbots or tech tools effectively (figure 3).

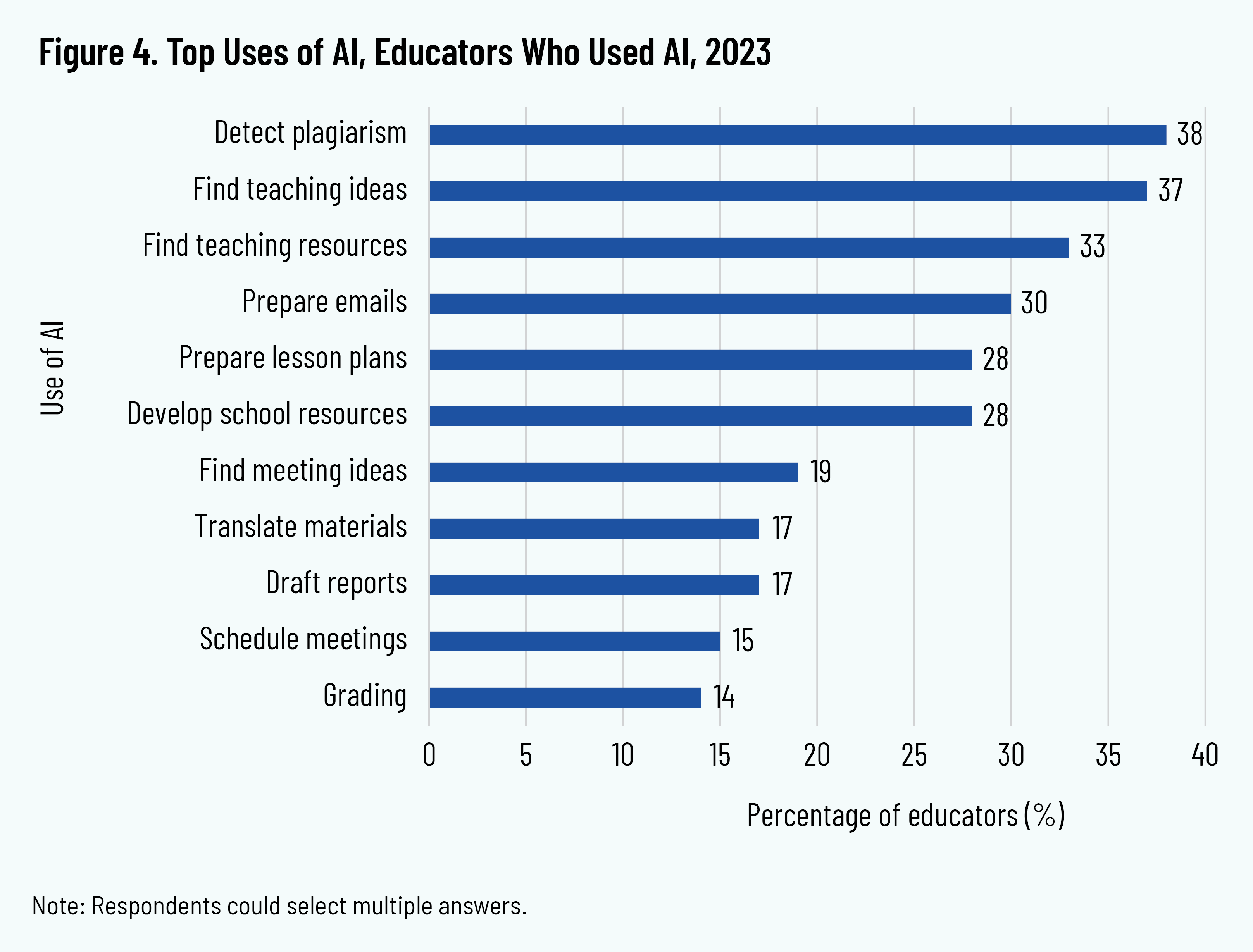

The survey then asked those educators who reported using AI with any frequency in their work (63 percent of the total sample) to select from a provided list all of the ways in which they use the technology. The top five ways that educators reported using AI in their work were to detect plagiarism (38 percent), find teaching ideas (37 percent) and teaching resources (33 percent), prepare emails (30 percent), and prepare lesson plans (28 percent). Other usage included developing school resources (28 percent), finding meeting ideas (19 percent), translating materials (17 percent), drafting reports (17 percent), scheduling meetings (15 percent), and grading (14 percent) (figure 4).

School Responses to AI

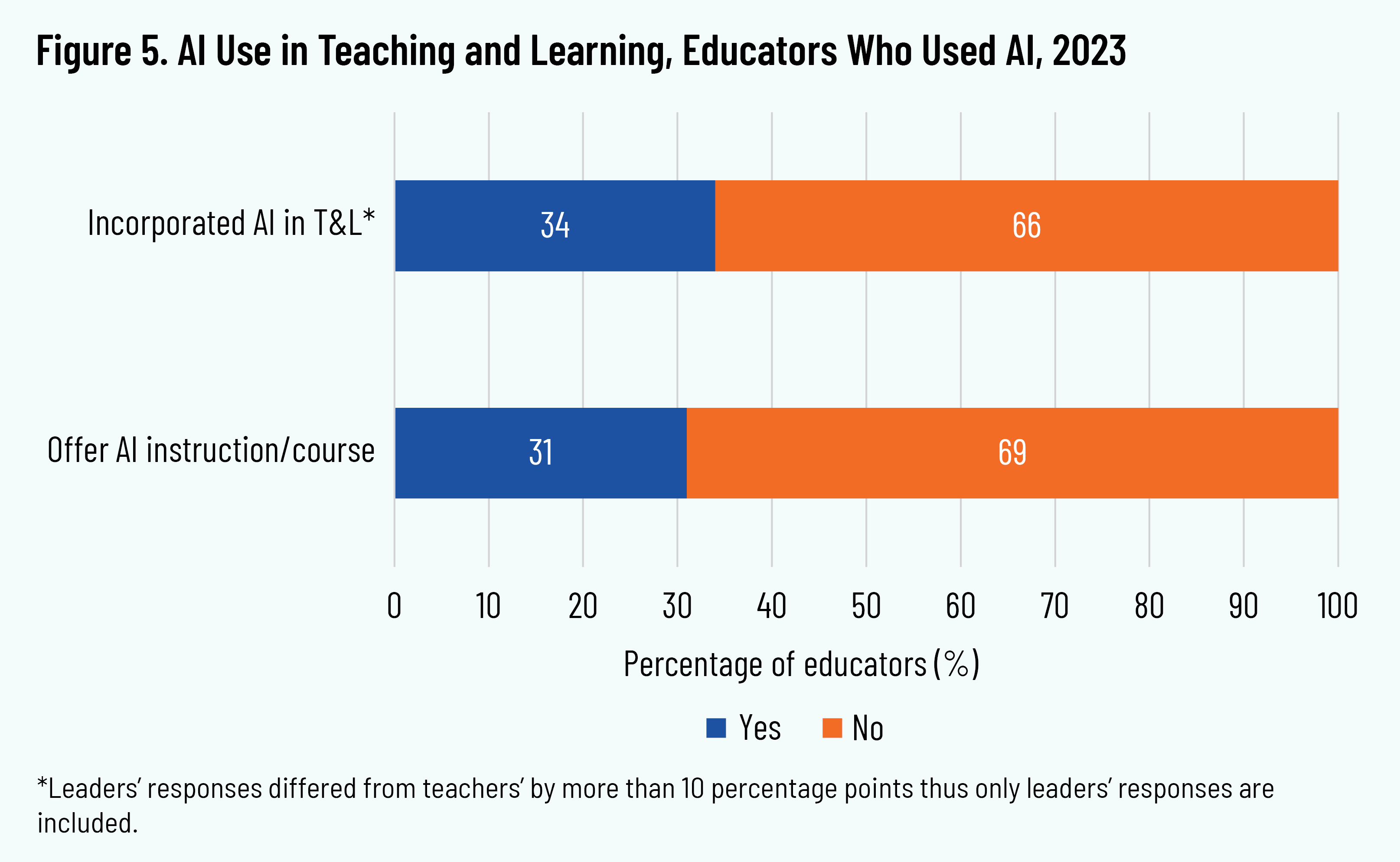

The survey asked educators who used AI with any frequency about how their schools were responding to the advent of AI technology. [6] About a third (34 percent) of respondents reported that their school was using AI in teaching and learning, and less than a third (31 percent) of respondents reported that their schools offered some kind of AI-specific instruction or course for students (figure 5). [7]

[6] The percentages reported here refer to the percentage of educators, not percentage of schools, as the sample potentially included more than one respondent from some of the schools.

[7] For this and other figures, an asterisk (*) denotes that leaders’ responses differed from teachers’ by more than 10 percentage points, and that only leaders’ responses are provided, with the rationale that they have a better vantage point of schoolwide incorporation of AI than individual classroom teachers do.

Respondents whose schools were incorporating AI instruction were asked to select from a provided list all the ways in which their schools were using AI. The top four uses were 1) automated tasks, such as providing teachers with various prompts for essays or creating lesson plans, 2) new learning tools, such as AI-powered games or other interactive games, 3) adaptive learning, providing students with more or less challenging materials depending on their performance, and 4) individualized learning, in which AI-powered tutoring programs tailor instruction to the individual needs of students (figure 6).

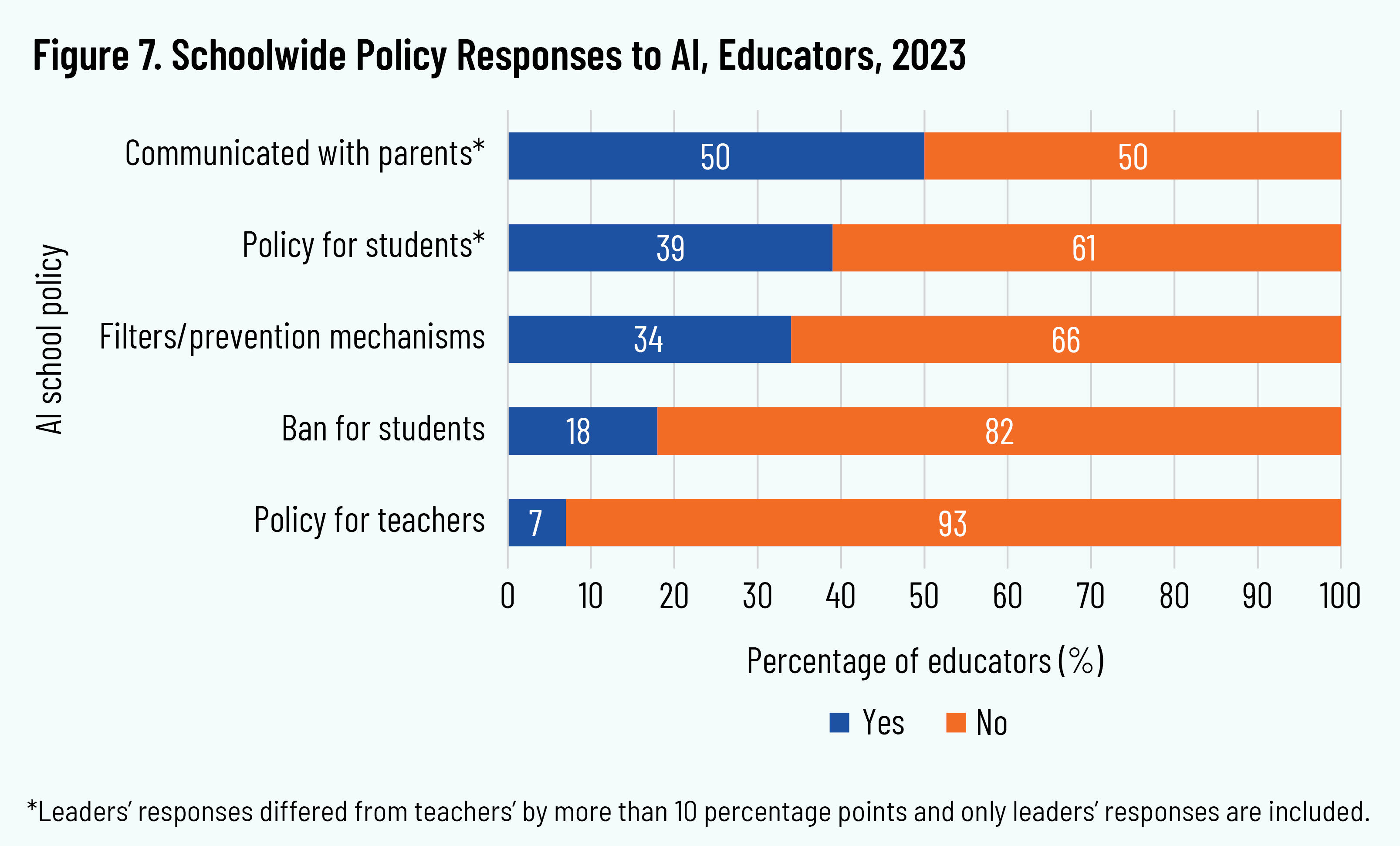

The survey also asked respondents whether their school had implemented policies about AI use, banned its use, installed filters or mechanisms to prevent AI use, or communicated about AI with parents. Thirty-nine percent of respondents indicated that their school had implemented an AI policy for students, 18 percent indicated that their school had banned student use of AI outright, and 34 percent said that their school had installed filters or other mechanisms to prevent student AI use. Schools have addressed teacher use of AI far less, with 7 percent of respondents indicating that their school had implemented an AI policy for teachers. As for communicating about AI with parents, half of the respondents (50 percent) indicated that their school had communicated with parents about AI in some way (figure 7).

Educator Perceptions of AI

The survey asked the respondents about their perceptions of the potential benefits, risks, and applications of AI in Christian schools. Although the responses cannot be assumed to be predictive, current perceptions can help in considering possible future trends in AI adoption.

Overall, when asked how supportive they were of using AI in their school, 29 percent of respondents reported being supportive or very supportive, 40 percent were neutral, and 31 percent were unsupportive. Since responses were based on respondents’ current level of familiarity with AI, it is possible that this distribution in level of support may change as familiarity grows.

The percentage of educators who were unsupportive of AI use (31 percent) is close to the percentage of educators who responded “no” when asked whether AI can be used in ways that are compatible with biblical views. If these are the same respondents (a possibility that was not explored in this analysis), then the unsupportiveness may be due to the belief that AI is incompatible with biblical views, rather than due to other possible reasons.

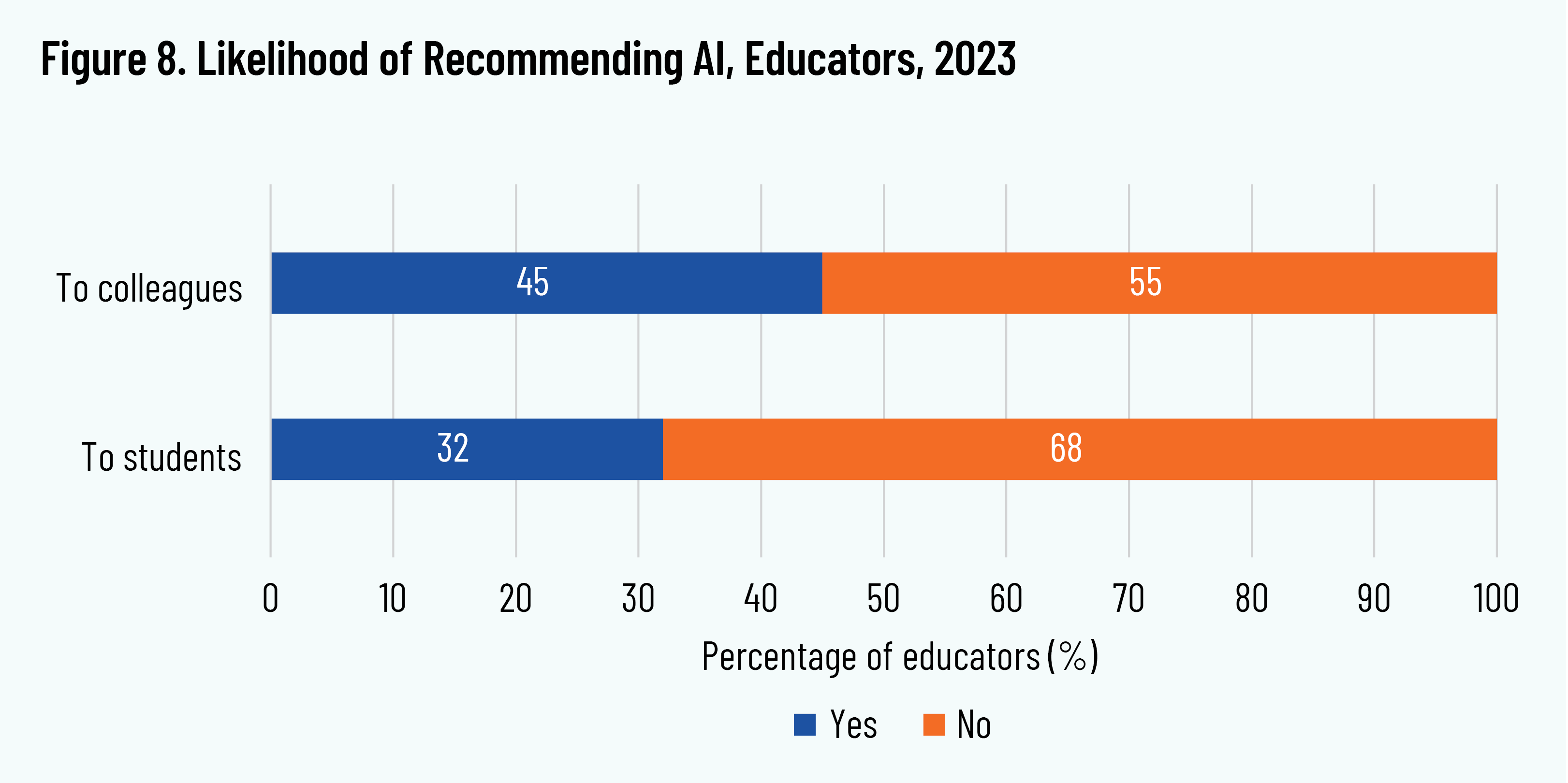

Educators viewed the use of AI differently for themselves than for their students. When asked to gauge the likelihood of their recommending AI tools, they indicated that they were more likely to recommend them to colleagues than to students, at 45 percent and 32 percent, respectively (figure 8).

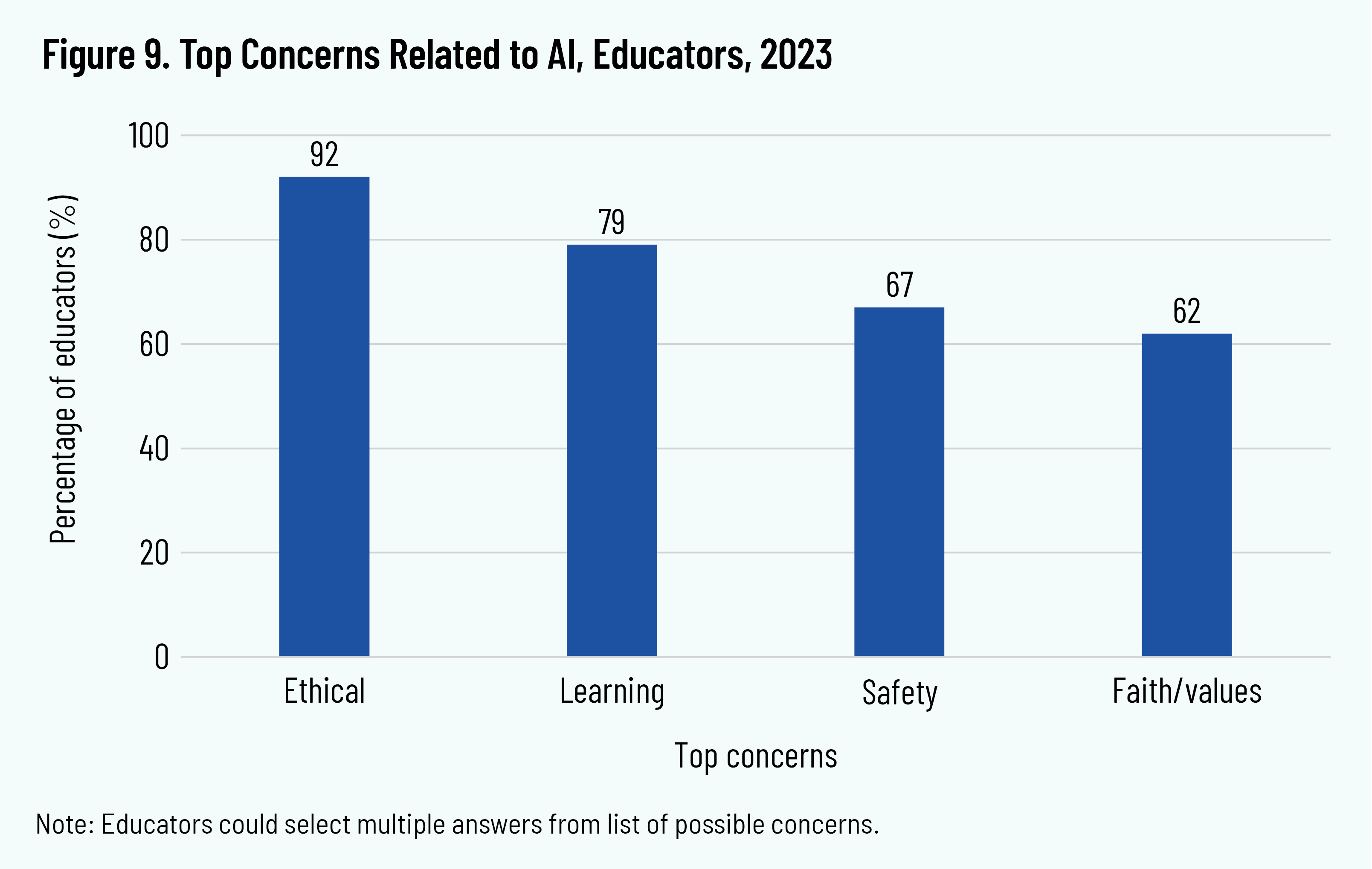

Two other data points may shed some light on this difference in the likelihood of recommending AI. When asked about specific benefits to teachers, most survey respondents agreed or strongly agreed that AI could help teachers save time and effort (87 percent) and develop more effective curriculum and lesson plans (60 percent), thus reflecting a belief that AI has the capacity to make teaching easier and more effective. By contrast, educators expressed significant concern regarding student use of AI. The concerns most frequently selected from the list provided related to ethics (cheating or other unethical behavior), student learning (hindering the development of students’ creative and critical thinking skills), safety (potential hacking or monitoring without consent), and students’ faith or values (figure 9).

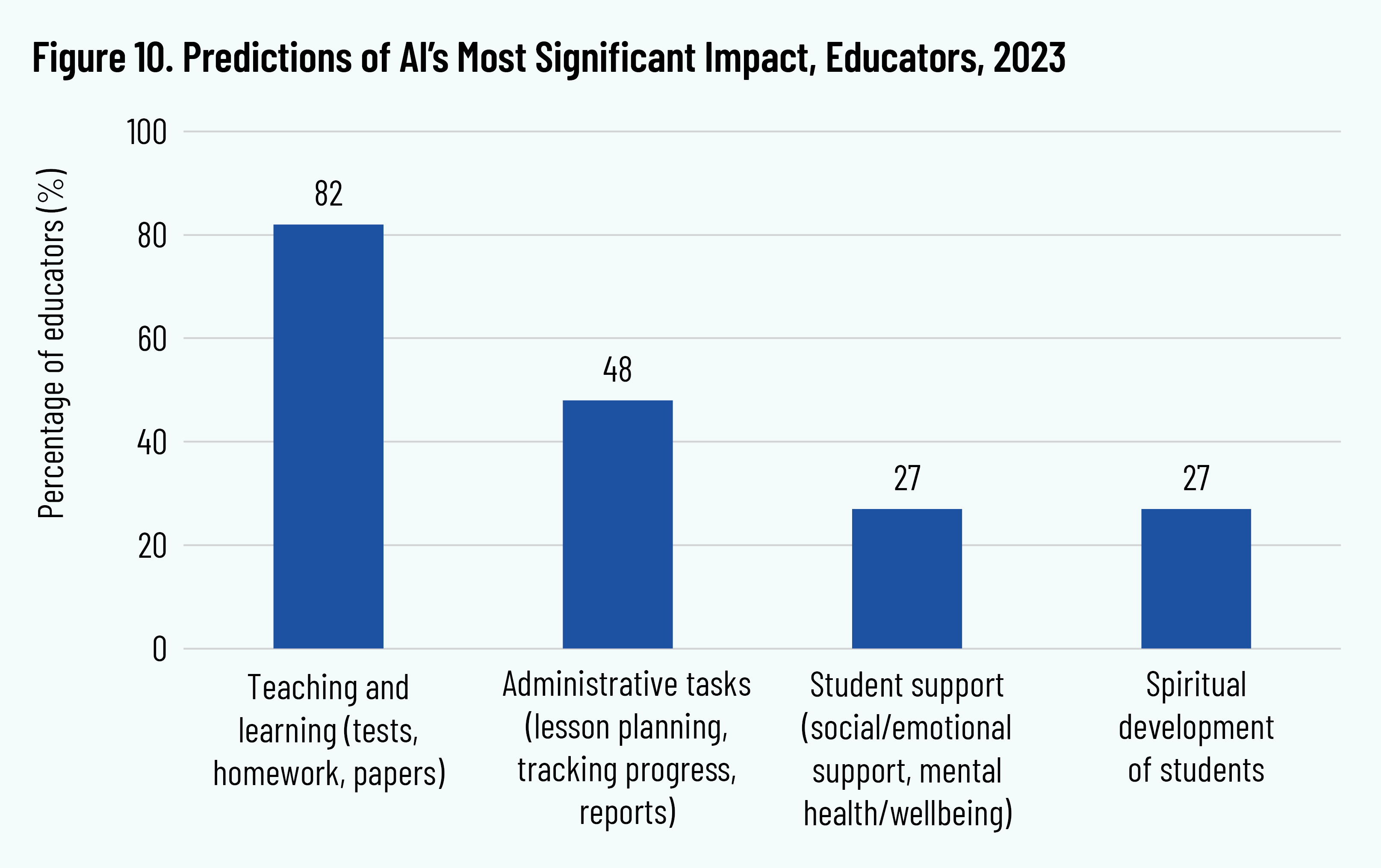

Despite these concerns, when asked to rank the aspects of schooling that they thought AI would have the greatest impact on in the future, a large majority (82 percent) of respondents chose teaching and learning as a top area. The next-highest area selected was administrative tasks, but at a distant second (48 percent), followed by student support services (27 percent) and student spiritual development (27 percent) (figure 10).

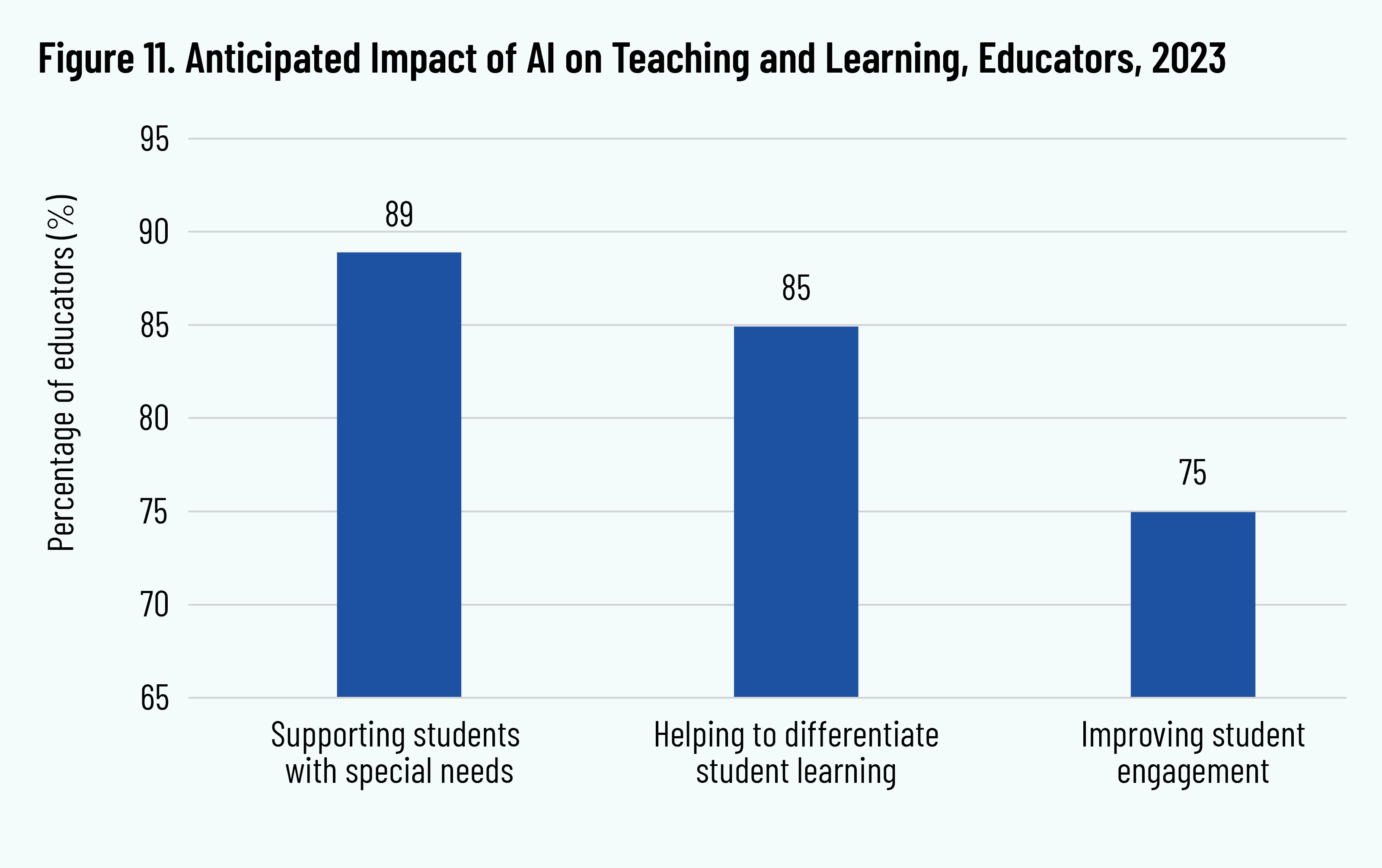

When asked about specific areas of teaching and learning that they anticipate AI will have a significant impact on, the top areas chosen were supporting students with special needs (89 percent), helping to differentiate student learning (85 percent), and improving student engagement (75 percent) (figure 11).

Early Adopters of AI

Taken together, the survey data may suggest that the Christian school sector is in the early stages of AI adoption, with most educators reporting low levels of familiarity, usage, and confidence in using it effectively. Similarly, most respondents (around two-thirds) indicated that their schools are not incorporating AI in teaching and learning. These levels contrast with educators’ prediction that teaching and learning will be the areas of schooling most impacted by AI in the future.

These data appear to concur with the assertion of many technologists and researchers [8] that generative AI is at the beginning of the technology S-curve. [9] At this initial stage, early adopters are readily incorporating it into their practice, but the majority of potential users are not yet familiar or comfortable enough with the technology to adopt it (and some may choose not to adopt AI at all). AI in education also appears to be in the second stage of the Gartner Hype Cycle, a five-stage model of the adoption of new technology. [10] During this stage, known as the “Peak of Inflated Expectations,” early publicity results in stories of both success and failure, with most practitioners unsure of whether to adopt the new technology.

[8] M. Grubbs, “The Inflection Point of Generative AI,” LinkedIn, August 2, 2023, https://www.linkedin.com/pulse/inflection-point-generative-ai-michael-grubbs; U. Nasir, “The Mass Adoption of AI: A New Sigmoid Curve?” Medium, February 20, 2023, https://medium.com/geekculture/the-mass-adoption-of-ai-a-new-sigmoid-curve-c86cffe1617; G. Scriven, “Generative AI Will Go Mainstream in 2024,” The Economist, November 13, 2023, https://www.economist.com/the-world-ahead/2023/11/13/generative-ai-will-go-mainstream-in-2024.

[9] R.N. Foster, Innovation: The Attacker’s Advantage (New York: Summit Books, 1986).

[10] B. Kanter, A. Fine, and P. Deng, “8 Steps Nonprofits Can Take to Adopt AI Responsibly,” Stanford Social Innovation Review, September 7, 2023, https://ssir.org/articles/entry/8_steps_nonprofits_can_take_to_adopt_ai_responsibly.

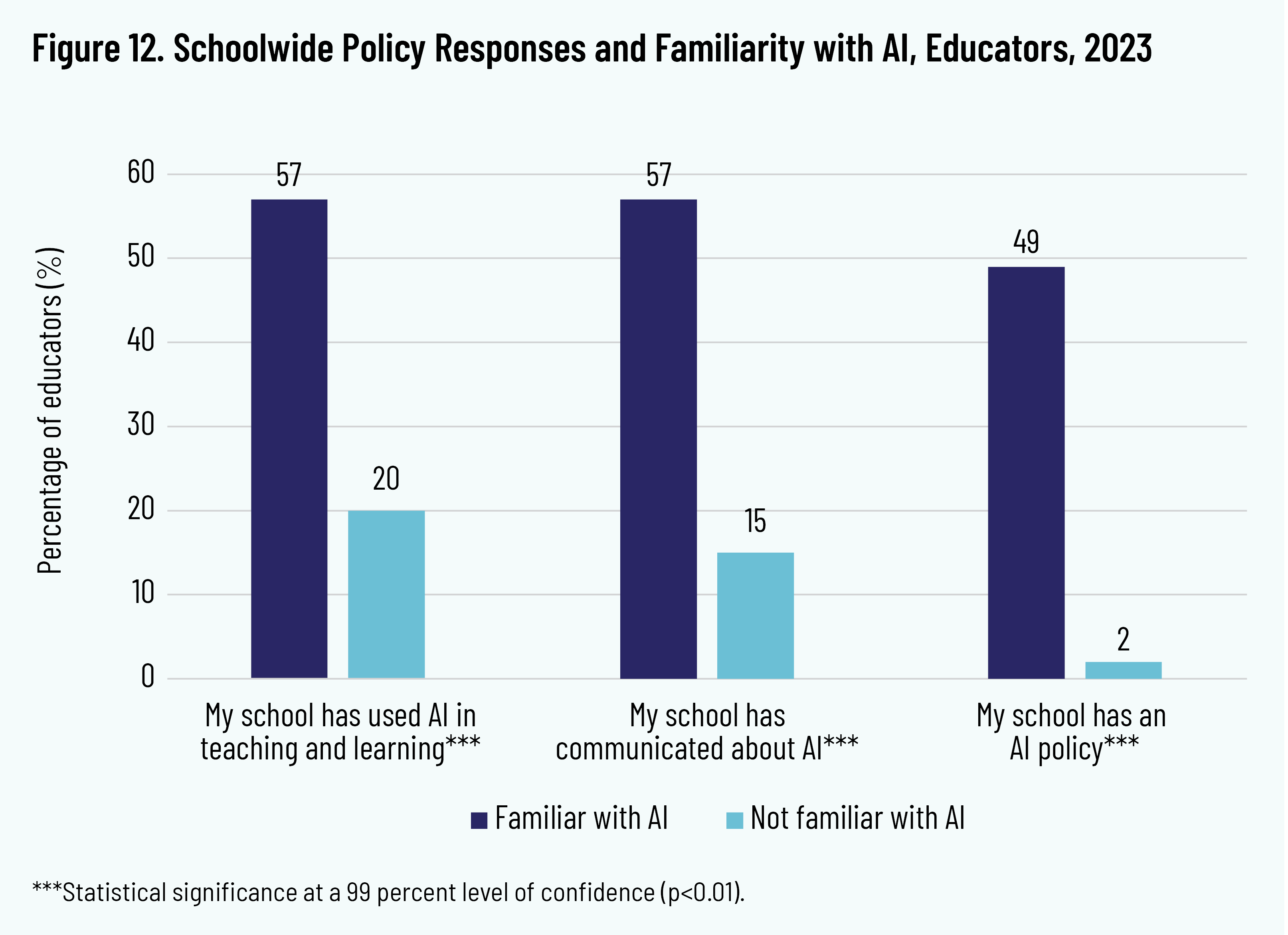

Data analysis for this survey identifies characteristics of educators and schools that correlate with higher levels of current AI adoption. No causal claim can be made, but these analyses yield the following statistically significant correlations. [11] First, there is a positive relationship between higher levels of educator familiarity and working at a school that has taken deliberate steps to respond to the rise of AI, such as implementing a policy on the use of AI or communicating about AI with teachers, parents, and students. Second, there are generally more favorable views of AI among school leaders (versus teachers), urban school educators (versus suburban), and educators at missional (versus covenantal) schools.

[11] On the graphs that follow in this section, we report statistical significance levels for each result, where *** shows statistical significance at a 99 percent level of confidence (p<0.01) and ** represents a 95 percent level of confidence (p<0.05).
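The star notation used on the graphs in this section maps p-values to confidence levels. A minimal sketch of that mapping, using only the two thresholds the report names (the function name `significance_stars` is illustrative):

```python
def significance_stars(p_value: float) -> str:
    """Map a p-value to the star notation described in the report.

    *** : p < 0.01 (99 percent level of confidence)
    **  : p < 0.05 (95 percent level of confidence)
    Results at or above 0.05 get no stars.
    """
    if p_value < 0.01:
        return "***"
    if p_value < 0.05:
        return "**"
    return ""

for p in (0.004, 0.03, 0.20):
    print(f"p = {p}: '{significance_stars(p)}'")
```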

School Responses and Educator Familiarity

Perhaps unsurprisingly, correlations were found between school responses to AI and educators’ level of familiarity with AI, after controlling for a range of variables (figure 12). Educators who are familiar with AI are more likely to work in a school that uses AI in teaching and learning than educators who are not familiar with AI, by about 37 percentage points (p<0.01). Similarly, educators who have more familiarity with AI are almost four times more likely to be in schools that have communicated about AI to their teachers and students than their counterparts who are not familiar with AI (p<0.01). Thirdly, schools that have communicated about AI to parents and students are more likely to set policies on AI for students, by about 47 percentage points (p<0.01) compared to schools that have not communicated about AI to the parents and students.

This study does not answer the question of which came first, a school’s response to AI or its educators’ familiarity with AI. It also does not explain whether this relationship is reciprocal, with school responses to AI bolstering educator familiarity with AI and vice versa. It does show, however, that a positive relationship exists between the two—that schoolwide engagement with AI is connected to Christian school educators’ familiarity with AI.

Leaders More Favorable than Teachers

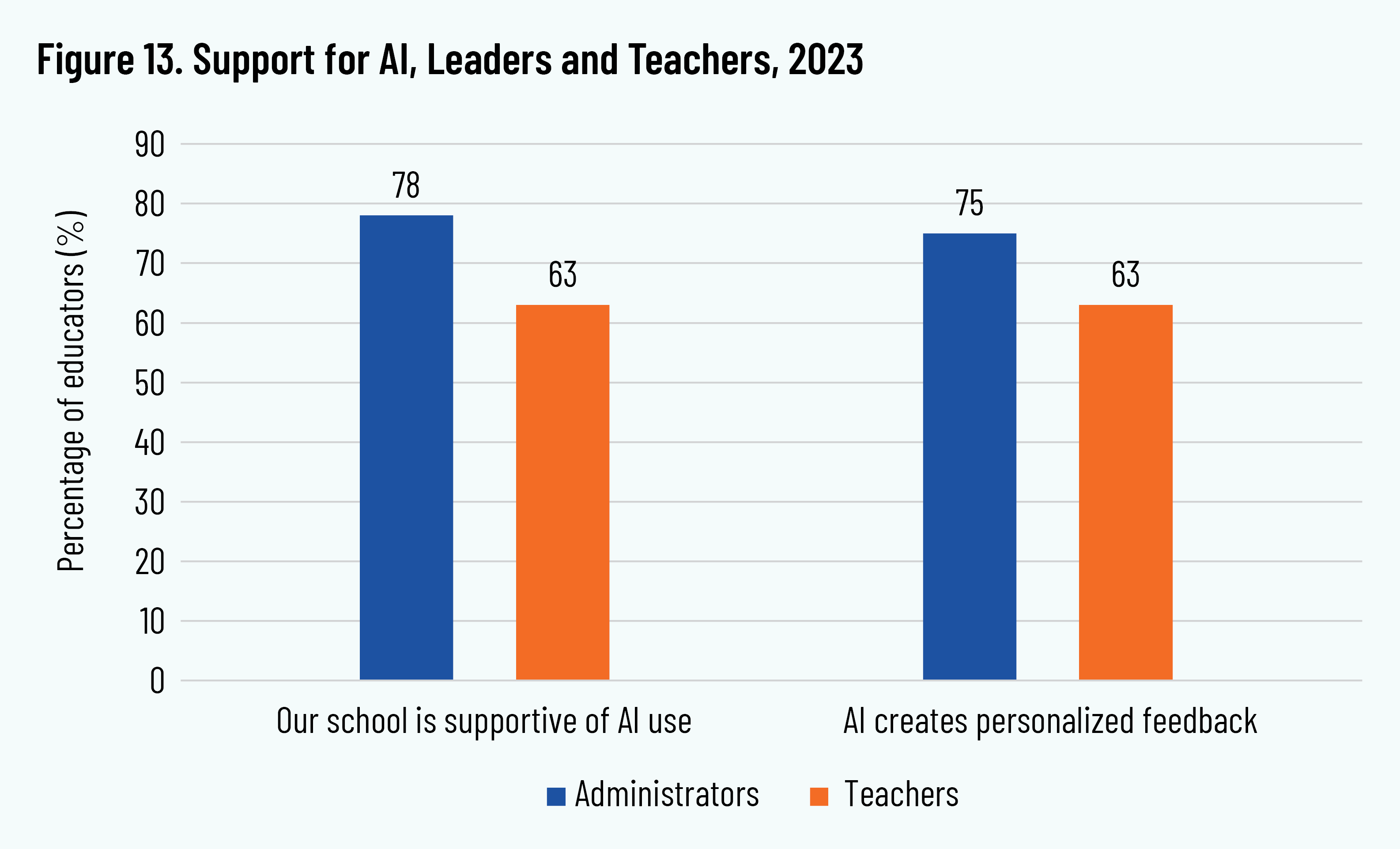

This study finds that leaders are more favorable about AI than teachers in two areas: first, in agreeing that they are supportive of AI use (78 percent for leaders versus 63 percent for teachers), and second, in the opinion that AI creates the opportunity for individualized feedback for students (75 percent for leaders versus 63 percent for teachers) (figure 13).

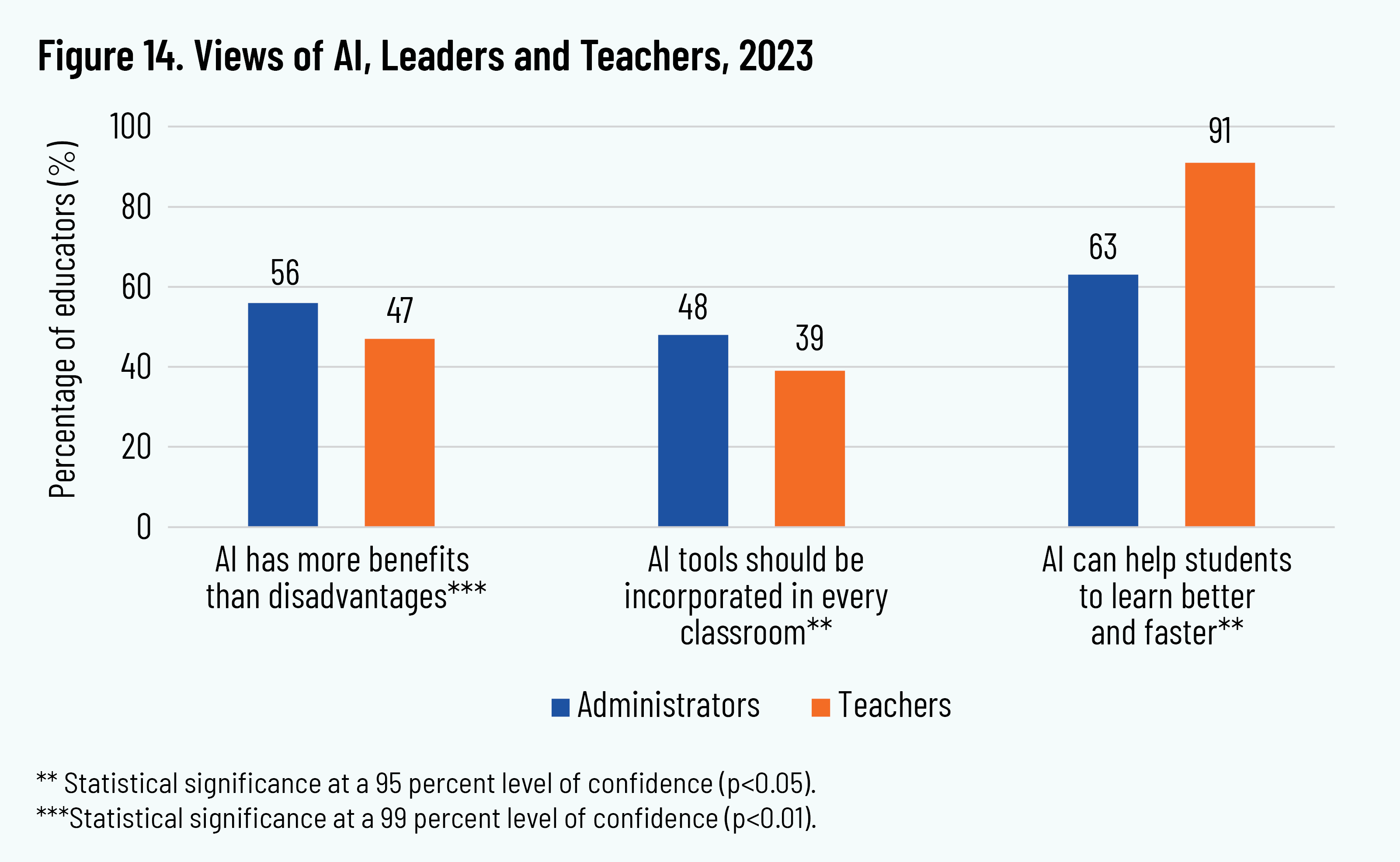

A statistically significant difference was also observed between leaders and teachers regarding their views of AI. These views tended to be more favorable among leaders than among teachers. Specifically, after controlling for certain demographic and school variables, leaders are more likely than teachers to agree that AI has more benefits than disadvantages, by nine percentage points (p<0.01). Teachers showed lower agreement (39 percent) than leaders (48 percent) as to whether AI should be incorporated in every classroom (p<0.05). Curiously, however, teachers were far more likely than leaders to agree that AI can help students learn better and faster, by a difference of 28 percentage points (p<0.05). This is the only area in which teachers were found to have a more favorable view of AI than leaders (figure 14).
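The findings report some gaps in percentage points and others as "times more likely," and the two measures are not interchangeable. A short worked example using the 48 percent and 39 percent agreement figures quoted above (the helper names are illustrative, and this is raw arithmetic on the quoted shares, not a reproduction of the regression-adjusted estimates):

```python
def percentage_point_gap(a_pct: float, b_pct: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return a_pct - b_pct

def relative_ratio(a_pct: float, b_pct: float) -> float:
    """How many times as large the first percentage is as the second."""
    return a_pct / b_pct

leaders_pct, teachers_pct = 48, 39  # agreement shares quoted in the text
gap = percentage_point_gap(leaders_pct, teachers_pct)
ratio = relative_ratio(leaders_pct, teachers_pct)
print(f"Gap: {gap} percentage points; ratio: {ratio:.2f} times")
```

The same nine-point gap would correspond to a much larger ratio if both shares were small, which is why the two measures should be read separately.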

When these results are taken together, they suggest that leaders are more eager than teachers to see AI used in teaching and learning. There appears to be a gap between administrator and teacher support for AI, with implications for leadership in Christian schools—namely that leaders seeking to adopt AI in their schools will need to address faculty reluctance toward AI, perhaps through training or other means that increase teacher awareness and comfort with AI.

Urban School Educators More Favorable than Rural

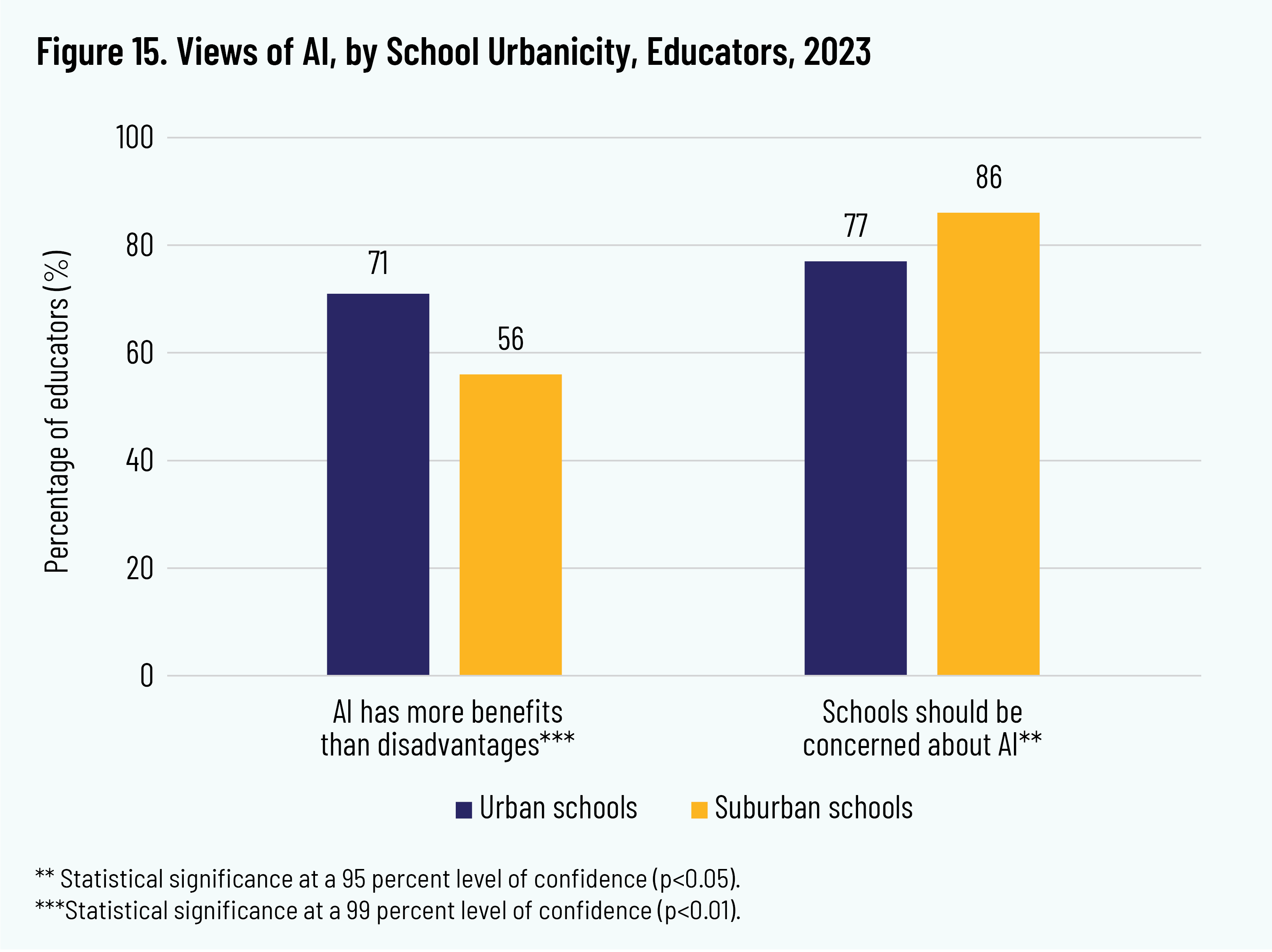

On average, compared to suburban educators, educators in urban schools are more likely to agree, by about 15 percentage points (p<0.01), that AI has more benefits than disadvantages. Similarly, after controlling for certain other variables, educators in suburban schools are more likely than those in urban schools to agree that Christian school educators should be concerned about the rise of AI technology for students, by a difference of about nine percentage points (p<0.05) (figure 15).

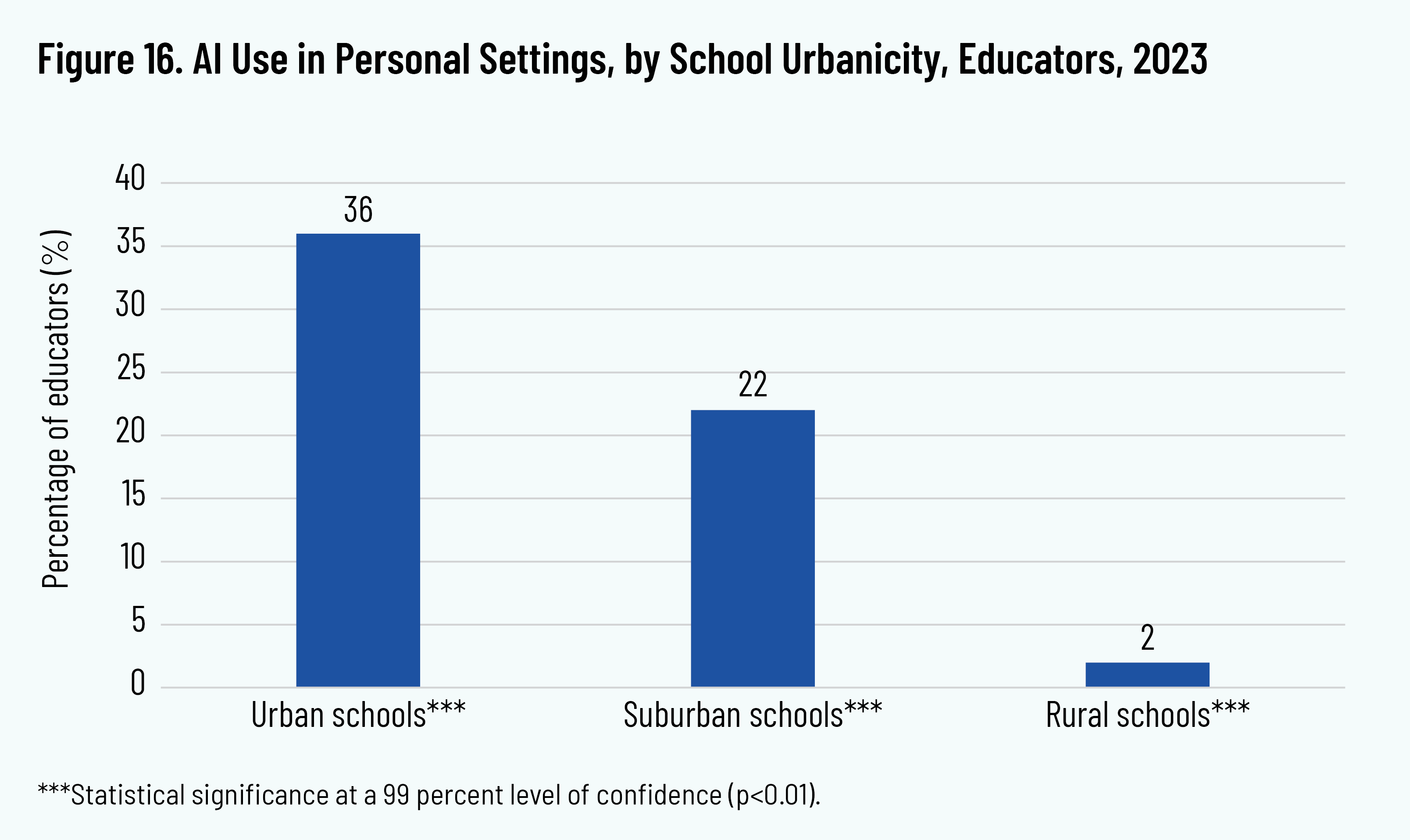

A correlation was found between educators’ personal use of AI and their school’s urbanicity. Urban educators reported using AI with more frequency in personal settings than suburban educators did, by 14 percentage points (p<0.01). Moreover, a large difference is observed when comparing rural educators and suburban educators: rural educators are less likely than suburban educators to say that they use AI in their personal settings, by about 20 percentage points (p<0.01) (figure 16).

Despite these findings, no correlation was observed between urbanicity and the use of AI for work purposes. When it comes to personal use of AI, however, urban school educators are more likely to be early adopters, followed by suburban school educators.

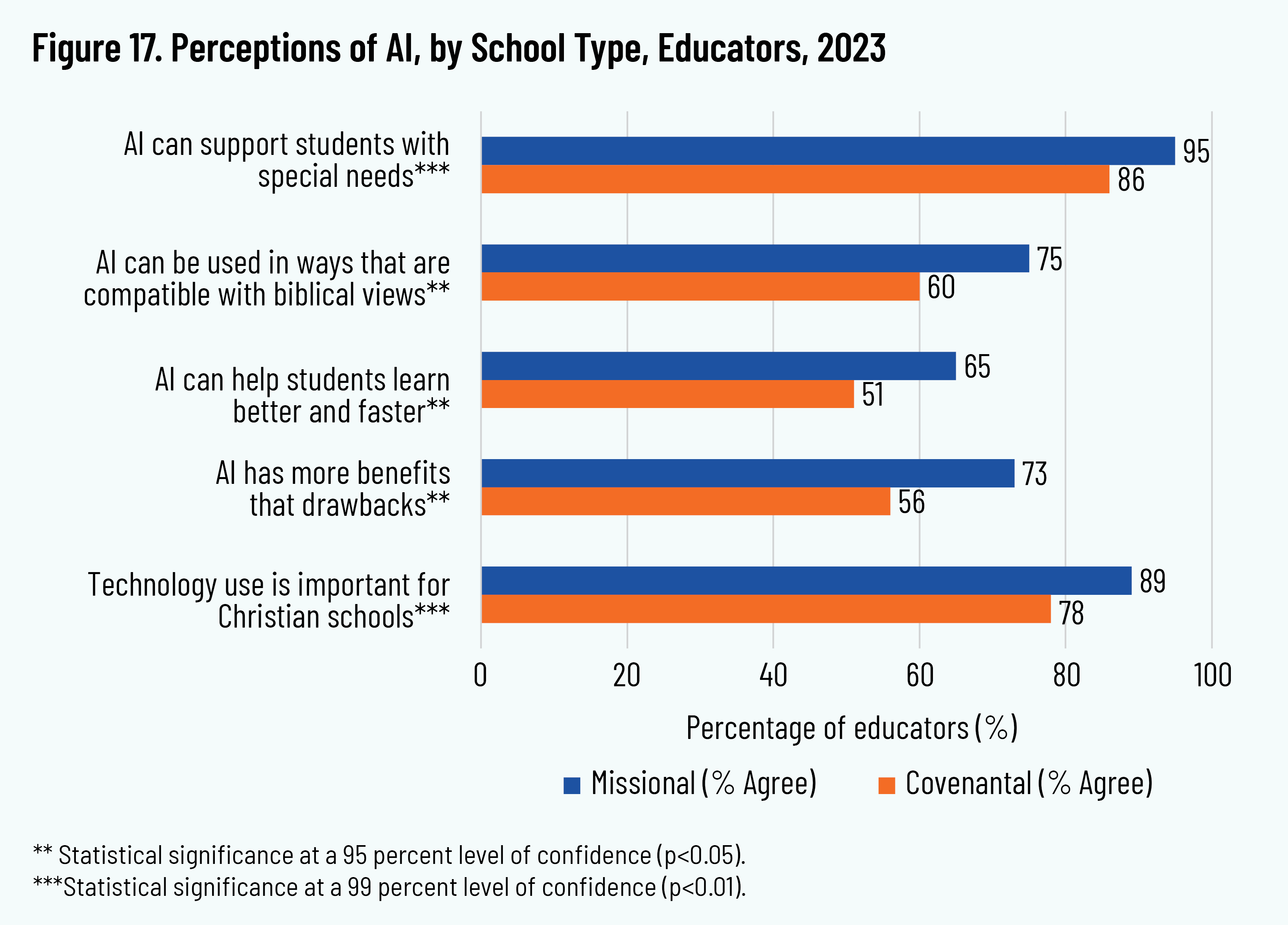

Missional Schools More Favorable than Covenantal Schools

Educators at missional schools (those that do not require parents to attend church or sign a statement of faith) had significantly more favorable views of AI than did educators at covenantal schools (those requiring parents to be active members of a church and/or to sign a statement of faith upon the admission of their children to the school). Specifically, educators at missional schools were more likely to affirm that technology is important for Christian schools (89 percent, versus 78 percent for those at covenantal schools), that AI has more benefits than drawbacks (73 percent, versus 56 percent), that AI can help students learn better and faster (65 percent, versus 51 percent), that AI can be used in ways that are compatible with biblical views (75 percent, versus 60 percent), and that AI can support students with special needs (95 percent, versus 86 percent) (figure 17). On average, educators at covenantal schools were four times more likely than those at missional schools to report that their school had banned student use of AI (p<0.01).

More research is needed to understand these observations related to missional school educators’ more favorable views of AI. One possibility, however, is that positive use cases for AI in instruction may be more readily identifiable among missional schools.

Discussion

Although increasing the effectiveness of teaching and learning should be a perennial aim in schools, the question of whether to adopt AI is not just one of utility. Brue, Schuurman, and VanderLeest (2022) suggest that “the ultimate and proper goal for technology [is] to help us be more fully human in relationship to each other and to God.” [12] To be sure, the question of how to achieve this goal, along with deciding whether or how to adopt AI technologies in Christian schools, is a complex one.

[12] E.J. Brue, D.C. Schuurman, and S.H. VanderLeest, A Christian Field Guide to Technology for Engineers and Designers (Westmont, IL: InterVarsity Press, 2022), 11.

To assist educators in this effort, the following three-lens framework is proposed for catalyzing conversation about AI in Christian schools. These lenses are non-discrete and overlapping, and they can be used together to frame discussions and planning about AI. These three lenses—the use lens, the human lens, and the mission lens—along with suggested reflection questions, can be used by leaders, teachers, students, and the school community together as they consider AI.

The Use Lens

Every field, whether education, law, medicine, insurance, or any other, is faced with new AI-driven or AI-supported technologies that are affecting its current work and will shape its future. This is often discussed in terms of developing “use cases” for these technologies, with an eye toward how they can help to improve performance (as mentioned earlier, in the case of education, the current use case discussion is mostly about ChatGPT and related tools).

Employing the use lens, Christian schools can consider the following questions:

- How can we increase our knowledge as a staff about AI (through readings, training, conferences, certificate programs, etc.)?

- Can we create small-scale experiments or pilots using AI in teaching and learning, from which we can learn without significant risk?

- How can we network with other schools to identify use cases or to collaborate on AI experiments, policies, or pilots?

- How can we effectively engage with all important stakeholders (leaders, teachers, parents, students, others) in discussions and decisions about the use of AI (whether through a task force or other method)?

The Human Lens

Many theologians, public intellectuals, technologists, and ethicists are concerned with the impact of AI on humanity. The second lens, the human lens, centers on the profound question of how AI may shape human nature and human experiences writ large. Fundamental to answering this question is one’s view of what it means to be human, from which flows one’s ethical reasoning about technology. John C. Lennox, Oxford professor and author of 2084: Artificial Intelligence and the Future of Humanity, makes the argument that while most technologies in and of themselves are values-neutral, the human question (of how humans use technology) is what imbues technological trajectories with an ethical dimension. As Lennox writes, “Of course, experience tells us that most technological advances are likely to have both an upside and a downside. . . . It is the same with AI. There are many valuable positive developments, and there are some very alarming negative aspects that demand close ethical attention.” [13] Thus, while connected to the use lens, the human lens goes a step further to consider the “why” and the “whether,” not just the “how” or “what” of AI.

[13] J.C. Lennox, 2084: Artificial Intelligence and the Future of Humanity (Grand Rapids, MI: Zondervan Reflective, 2020), 54.

Using a human lens, Christian schools can consider the following questions:

- What is our theological framework for understanding the nature of human beings (for example, as created in God’s image), and what are the implications of that framework for understanding human inventions and advances (such as AI)?

- What is our educational purpose or philosophy? How are we trying to form our students as human persons? How might the use of AI in our schools enhance or detract from this purpose?

- How do we address ethical thinking at our school, especially when it comes to complex issues in society such as AI technology?

- Do we offer students opportunities to wrestle with contemporary ethical issues (such as through reading, considering opposing viewpoints, and debating)?

- What training and support do our faculty need to help our students do this thinking well?

The Mission Lens

People from any faith background or none can engage with questions about AI through the human or use lenses, but the question of how AI can be viewed through the lens of Christian mission is, of course, of importance to Christians inhabiting this moment in history. Both the Great Commission (Matthew 28:16–20) and Great Commandment (Matthew 22:34–40) have implications for the use of any technology: they lead Christians to ask whether, and if so how, AI can help to spread the Gospel, make disciples, and enable us to better love and serve our neighbors. This is the latest in a long line of similar questions regarding new technologies, dating at least as far back as the 15th century, when Christians considered uses for the printing press (with the result that from the Gutenberg Bible to today, the Bible is the most printed book in history). This third lens certainly overlaps with the first and second, and while Christians should consider both of those, they have a unique obligation to pay attention to this lens as well.

Using a mission lens, Christian schools can consider the following questions:

- What is our school’s theological view of how Christians should engage the world? How does that view inform teaching, learning, and discipleship at our school?

- Given this theological view, along with our school’s mission, how can we evaluate the potential of AI for our school—including whether or how AI can be used to nurture Christian beliefs and values, and service to others?

- How might our school winsomely engage faculty, parents, students, or other constituents who hold different theological views of AI?

- What resources can we draw upon (books, speakers, webinars, etc.) that address AI from a distinctly Christian view?

Conclusion

This study yielded descriptive data on AI adoption among over 700 Christian school leaders and teachers. The study found that on nearly all measures (schoolwide use of AI, as well as educator use and confidence level), roughly a third or fewer of educators reported adoption of, or confidence with, AI. When exploring the factors that are correlated with early adoption of AI, the study found more favorable views of AI among leaders (versus teachers), greater usage among educators at schools that have taken steps to adopt or address AI, and more favorable views of AI among educators at more urban schools and at schools with missional (versus covenantal) admissions policies.

The tripartite framework of use, human, and mission lenses can provide a starting point for engaging the entire school community in dialogue and strategy about AI. While AI may differ in significant and potentially profound ways from new technologies that have preceded it, Christian school educators can take encouragement in remembering, and applying lessons from, previous waves of technological change that they have navigated. When it comes to deciding whether or how to engage AI, they will need thoughtfulness and intentionality to chart a deliberate course into the future.

References

Brue, E.J., D.C. Schuurman, and S.H. VanderLeest. A Christian Field Guide to Technology for Engineers and Designers. Westmont, IL: InterVarsity Press, 2022.

Cheng, A., R. Djita, and D. Hunt. “Many Educational Systems, A Common Good: An International Comparison of American, Canadian, and Australian Graduates from the Cardus Education Survey.” Cardus, 2022. https://www.cardus.ca/research/education/reports/many-educational-systems-a-common-good/.

Foster, R.N. Innovation: The Attacker’s Advantage. New York: Summit Books, 1986.

Gordon, C. “How Are Educators Reacting to ChatGPT?” Forbes, April 30, 2023. https://www.forbes.com/sites/cindygordon/2023/04/30/how-are-educators-reacting-to-chat-gpt/.

Grubbs, M. “The Inflection Point of Generative AI.” LinkedIn, August 2, 2023. https://www.linkedin.com/pulse/inflection-point-generative-ai-michael-grubbs/.

Kanter, B., A. Fine, and P. Deng. “8 Steps Nonprofits Can Take to Adopt AI Responsibly.” Stanford Social Innovation Review, September 7, 2023. https://ssir.org/articles/entry/8_steps_nonprofits_can_take_to_adopt_ai_responsibly.

Lennox, J.C. 2084: Artificial Intelligence and the Future of Humanity. Grand Rapids, MI: Zondervan Reflective, 2020.

Montenegro-Rueda, M., J. Fernández-Cerero, J.M. Fernández-Batanero, and E. López-Meneses. “Impact of the Implementation of ChatGPT in Education: A Systematic Review.” Computers 12, no. 8 (2023): 1–13. https://doi.org/10.3390/computers12080153.

Nasir, U. “The Mass Adoption of AI: A New Sigmoid Curve?” Medium, February 20, 2023. https://medium.com/geekculture/the-mass-adoption-of-ai-a-new-sigmoid-curve-c86cffe1617.

Scriven, G. “Generative AI Will Go Mainstream in 2024.” The Economist, November 13, 2023. https://www.economist.com/the-world-ahead/2023/11/13/generative-ai-will-go-mainstream-in-2024.